Docker Basics

Docker images are like packages or templates used to create one or more containers. A container is meant to run a specific task or process; once that task or process finishes, the container exits.

When referring to a container by ID, you only need to type the first few characters, as long as that prefix is unique among your containers.
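The prefix rule can be modeled as a lookup over full container IDs. This is an illustrative Python sketch, not Docker's own code; the function name `resolve_id` and the sample IDs are hypothetical.

```python
def resolve_id(prefix: str, container_ids: list[str]) -> str:
    """Return the single full ID that starts with `prefix`.

    Hypothetical model of how short IDs are accepted:
    the prefix must match exactly one container.
    """
    matches = [cid for cid in container_ids if cid.startswith(prefix)]
    if not matches:
        raise LookupError(f"no container matches prefix {prefix!r}")
    if len(matches) > 1:
        raise LookupError(f"prefix {prefix!r} is ambiguous: {len(matches)} matches")
    return matches[0]


ids = ["3f2a9c1d7e", "3f8b0e4a21", "a91c5d0f6b"]  # made-up IDs
print(resolve_id("a9", ids))   # unique prefix
print(resolve_id("3f2", ids))  # "3f" alone would match two containers
```

Two characters are enough for the third ID, but the first two need three because they share the prefix `3f`.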

Basic Commands

- Run a container:

docker run <image>

  - Pulls the image down from Docker Hub if it is not found locally
  - Subsequent runs reuse the locally stored image

- List running containers:

docker ps

- List all containers (running or not):

docker ps -a

- Set the name of a container:

docker run --name <name> <image>

- Stop a container:

docker stop <container-name/container-id>

- Remove a stopped/exited container:

docker rm <container-name/container-id>

  - Docker prints the name (or ID) back when the removal succeeds

- View additional container info (JSON format):

docker inspect <container>
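Because docker inspect prints a JSON array with one object per container, its output is easy to consume from scripts. The following is a minimal Python sketch. `Name`, `State.Status` and `Config.Image` are fields of the inspect output, while the helper names are hypothetical.

```python
import json
import subprocess


def container_summary(inspect_json: str) -> dict:
    """Pick a few fields out of `docker inspect` output (a JSON array)."""
    info = json.loads(inspect_json)[0]  # one object per inspected container
    return {
        "name": info["Name"].lstrip("/"),  # names are stored with a leading slash
        "status": info["State"]["Status"],
        "image": info["Config"]["Image"],
    }


def inspect(container: str) -> dict:
    """Run `docker inspect` (needs the Docker CLI and daemon) and summarize it."""
    out = subprocess.run(
        ["docker", "inspect", container],
        capture_output=True, text=True, check=True,
    ).stdout
    return container_summary(out)
```

With a running container named `web` (a hypothetical name), `inspect("web")` would return something like `{"name": "web", "status": "running", "image": "nginx"}`.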

- Execute a command in a running container:

docker exec <container-name/container-id> <command-to-execute>

- Run a container in the background (detached mode):

docker run -d <image>

- Attach back to a background container:

docker attach <container-name/container-id>

- List local Docker images:

docker images

- Remove an image:

docker rmi <image-name/image-id>

  - You must stop and delete all containers that depend on the image before removing it

- Download an image without running it:

docker pull <image-name>

Docker Run

- Use tags to run a specific image version:

docker run <image>:<tag>

  - E.g. docker run image:1.0

- Keep standard input (STDIN) open and attach a terminal (interactive mode):

docker run -it <image>

- Port mapping:

docker run -p <host-port>:<container-port> <image>

  - E.g. docker run -p 80:5000 <image> forwards port 80 on the host to port 5000 inside the container
  - You can run multiple instances of the same image, each mapped to a different host port

- Volume mapping to persist data:

docker run -v <host-dir>:<container-dir> <image>

  - E.g. docker run -v /opt/datadir:/var/lib/mysql mysql

- View container logs:

docker logs <container>
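The options above combine into a single docker run command line. The following is a hypothetical Python helper (`docker_run_args` is not part of any library) that assembles the argument list, putting the host side first for both -p and -v and placing every option before the image name.

```python
def docker_run_args(image, ports=None, volumes=None, env=None, detach=False, name=None):
    """Build a `docker run` argument list (hypothetical helper).

    ports:   {host_port: container_port}
    volumes: {host_path_or_volume: container_path}
    env:     {VAR: value}
    """
    args = ["docker", "run"]
    if detach:
        args.append("-d")
    if name:
        args += ["--name", name]
    for host_port, container_port in (ports or {}).items():
        args += ["-p", f"{host_port}:{container_port}"]  # host side first
    for source, target in (volumes or {}).items():
        args += ["-v", f"{source}:{target}"]  # host side first
    for key, value in (env or {}).items():
        args += ["-e", f"{key}={value}"]
    args.append(image)  # options must come before the image name
    return args


print(" ".join(docker_run_args("mysql", volumes={"/opt/datadir": "/var/lib/mysql"}, detach=True)))
```

The resulting list can be passed directly to `subprocess.run(..., check=True)`. Building a list instead of a single string avoids shell quoting problems.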

Docker Images

- Create your own image:
  - Write a Dockerfile that lists, in order, the instructions to run when building the image
  - Build the image from the directory that contains the Dockerfile (the build context):

docker build -t username/<app_name> .

  - Push it to Docker Hub (after docker login):

docker push username/<app_name>

- Create an environment variable:
  - In code:

color = os.environ.get('APP_COLOR')

  - On the host:

export APP_COLOR=blue; python app.py

  - When running a container:

docker run -e APP_COLOR=blue <image>
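The following is a slightly fuller app.py that reads the variable shown above. The fallback value "red" is an arbitrary assumption, used when APP_COLOR is not set on the host or passed with -e.

```python
import os


def get_app_color(default: str = "red") -> str:
    """Read APP_COLOR from the environment, falling back to a default.

    The default "red" is a placeholder chosen for this sketch.
    """
    return os.environ.get("APP_COLOR", default)


if __name__ == "__main__":
    print(f"Background color: {get_app_color()}")
```

Running `docker run -e APP_COLOR=blue <image>` would make this print `Background color: blue` without rebuilding the image.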

- ENTRYPOINT is like CMD, except that any arguments given at the end of the docker run command are appended to the ENTRYPOINT, whereas they replace CMD completely
- Use both together: ENTRYPOINT fixes the command, and CMD supplies default arguments that are used only when none are given to docker run
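The interaction can be modeled as a small Python function. This simplified sketch covers only the exec (JSON array) form of both instructions, and the `sleep` examples are hypothetical.

```python
def effective_command(entrypoint, cmd, run_args):
    """Return the argv a container starts with (exec-form model only).

    entrypoint, cmd: lists from the Dockerfile (empty list if absent)
    run_args:        extra arguments given after the image in `docker run`
    """
    args = run_args if run_args else cmd  # run arguments replace CMD entirely
    return entrypoint + args              # ...and are appended to ENTRYPOINT


# Dockerfile: ENTRYPOINT ["sleep"] and CMD ["5"]
print(effective_command(["sleep"], ["5"], []))      # default: sleep 5
print(effective_command(["sleep"], ["5"], ["10"]))  # docker run <image> 10 -> sleep 10
# Dockerfile with only CMD ["sleep", "5"]
print(effective_command([], ["sleep", "5"], ["echo", "hi"]))  # CMD replaced: echo hi
```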

Docker Compose

- Link two containers together:

docker run -d --name=vote -p 5000:80 --link <container_name>:<name_that_vote_container_looking_for> voting-app

  - Adds an entry to the /etc/hosts file inside the voting-app container
  - Using links this way is deprecated; user-defined networks are the recommended alternative

- Bring up the entire application stack defined in docker-compose.yml:

docker-compose up

  - With Compose V2 the command is docker compose up

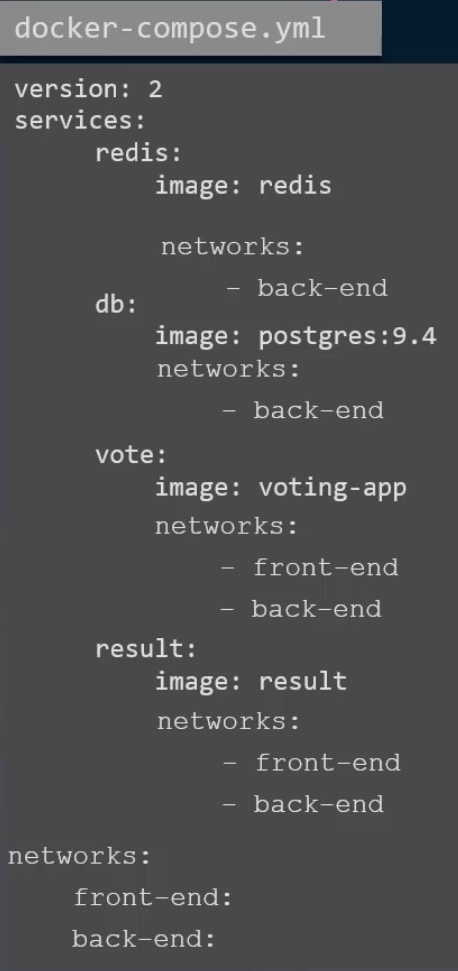

Use networks to separate back-ends and front-ends


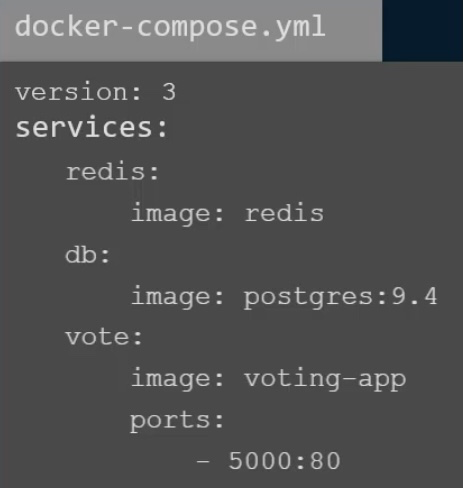

Compose file format version 2 and later (including version 3) automatically create a default network that connects all containers when no networks are specified

- The official postgres image now requires a superuser password (POSTGRES_USER is optional and defaults to postgres):

environment:
  POSTGRES_USER: "postgres"
  POSTGRES_PASSWORD: "postgres"

Docker Engine, Storage

- Docker Engine is made up of: Docker CLI, REST API, Docker Daemon

- Storage drivers are chosen automatically based on the underlying OS (e.g. AUFS, ZFS, BTRFS, Device Mapper, Overlay, Overlay2)

- Run Docker commands against a remote host:

docker -H=remote-docker-engine:port run <image>

  - E.g. docker -H=10.123.2.1:2375 run nginx

- Restrict the resources a container can use (unlimited by default):

docker run --cpus=.5 --memory=100m <image>

  - --cpus=.5 allows at most 50% of one CPU; m = megabytes
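The two flags can be translated into concrete limits. This sketch rests on two points:

- Docker's documentation describes `--cpus=N` as equivalent to a CFS period of 100000 microseconds with a quota of N × 100000.
- Memory suffixes are binary multiples, so `100m` is 100 × 1024 × 1024 bytes.

The parser is simplified and handles only the `b`, `k`, `m` and `g` suffixes.

```python
CFS_PERIOD_US = 100_000  # default CFS scheduler period (microseconds)
UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def cpu_quota(cpus: float) -> int:
    """Microseconds of CPU time allowed per CFS period for --cpus=<cpus>."""
    return int(round(cpus * CFS_PERIOD_US))


def memory_bytes(limit: str) -> int:
    """Convert a --memory value such as '100m' into bytes (binary units)."""
    limit = limit.strip().lower()
    if limit[-1] in UNITS:
        return int(float(limit[:-1]) * UNITS[limit[-1]])
    return int(limit)  # a plain number is already in bytes


print(cpu_quota(0.5))        # 50000 -> half of one CPU per period
print(memory_bytes("100m"))  # 104857600
```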

- By default, Docker stores all its data under /var/lib/docker
- Docker caches image layers, saving time and storage by reusing layers that are identical across images and builds instead of recreating them
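Layer caching can be pictured as a chain of hashes. Each layer's cache key depends on its parent layer and its own instruction, so an unchanged prefix of a Dockerfile is reused and everything after the first changed line is rebuilt. This is a simplified model: real Docker also checksums the files copied by COPY/ADD. The instruction lists are hypothetical.

```python
import hashlib


def layer_keys(instructions):
    """Return a cache key per instruction, chained to the parent layer's key."""
    keys, parent = [], ""
    for instruction in instructions:
        parent = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(parent)
    return keys


def reused_layers(old, new):
    """Count how many leading layers of `new` can be taken from `old`'s cache."""
    count = 0
    for a, b in zip(layer_keys(old), layer_keys(new)):
        if a != b:
            break  # every later key differs too, since keys are chained
        count += 1
    return count


v1 = ["FROM ubuntu", "RUN apt-get update", "COPY . /opt/app", "CMD python app.py"]
v2 = ["FROM ubuntu", "RUN apt-get update", "COPY . /opt/app", "CMD python app2.py"]
print(reused_layers(v1, v2))  # 3: only the last layer is rebuilt
```

This is why rarely changing instructions usually go near the top of a Dockerfile.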

- Image layers are read-only (files are modified via copy-on-write into the container layer); the container layer is read-write
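Python's `collections.ChainMap` gives a rough analogy for this layering:

- Lookups search the writable container layer first, then the read-only image layers.
- Writes always land in the container layer and leave the image untouched.

It is only an analogy. A real union filesystem copies the whole file up on first write and uses whiteout files for deletions. The file paths are hypothetical.

```python
from collections import ChainMap

# Read-only image layers (topmost first); file path -> contents
app_layer = {"/opt/app/app.py": "print('v1')"}
base_layer = {"/etc/hostname": "base", "/etc/os-release": "Ubuntu"}

container_layer = {}  # the only writable layer
fs = ChainMap(container_layer, app_layer, base_layer)

print(fs["/etc/os-release"])  # read falls through to the base image layer

fs["/opt/app/app.py"] = "print('patched')"  # write goes to the top (container) layer
print(fs["/opt/app/app.py"])                # the container sees the modified file
print(app_layer["/opt/app/app.py"])         # the image layer is unchanged
```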

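- Persist container data (volume mounting):

docker volume create <data_volume_name>
docker run -v <data_volume_name>:/var/lib/mysql mysql

  - The volume is created on the host under /var/lib/docker/volumes; docker run -v also creates it automatically if it does not exist yet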

- Persist container data from any location on the host (bind mounting):

docker run -v /full/path/to/other/<data_dir>:/var/lib/mysql mysql

  - More modern mounting syntax (no spaces between the comma-separated options):

docker run --mount type=bind,source=/host/dir,target=/container/dir <image>

Docker Networking

- See available networks:

docker network ls

- See which network a container is attached to:

docker inspect <container>

- Docker creates 3 networks automatically when it is installed:
  - Bridge: the default network a container gets attached to (only 1 created by default)
  - None: no access to other containers or the external network
  - Host: no network isolation between the Docker host and the container (no port mapping needed, but two containers cannot listen on the same host port)
  - E.g. docker run --network=none ubuntu (or --network=host; bridge is the default)

- Create a custom bridge network:

docker network create --driver bridge --subnet <IP>/<mask> --gateway <IP> <custom-network-name>

- Docker runs an embedded DNS server at 127.0.0.11 that lets containers on user-defined networks resolve each other by container name rather than IP address
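Before running docker network create, it can help to check that the chosen gateway lies inside the subnet. This is a minimal Python sketch using the standard `ipaddress` module. The 172.28.0.0/16 subnet and the network name `my-bridge` are hypothetical values.

```python
import ipaddress


def network_create_args(name: str, subnet: str, gateway: str) -> list[str]:
    """Validate subnet/gateway and build a `docker network create` command."""
    net = ipaddress.ip_network(subnet)  # raises ValueError if host bits are set
    gw = ipaddress.ip_address(gateway)
    if gw not in net:
        raise ValueError(f"gateway {gw} is outside subnet {net}")
    return ["docker", "network", "create", "--driver", "bridge",
            "--subnet", str(net), "--gateway", str(gw), name]


print(" ".join(network_create_args("my-bridge", "172.28.0.0/16", "172.28.0.1")))
```

Note that the subnet takes a CIDR mask, while the gateway is a single address without one.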

Docker Registry

- Central repository of Docker images
- Deploy a private registry:
  - Run the registry image as a container (e.g. docker run -d -p 5000:5000 --name registry registry:2)
  - Tag the image:

docker image tag <my-image> localhost:<port>/<my-image>

  - Push the image to the registry:

docker push localhost:<port>/<my-image>

  - Pull from the registry:

docker pull <IP/localhost>:<port>/<my-image>
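The tag determines where docker push sends the image. If the first path component looks like a host (it contains a dot or a colon, or is `localhost`), Docker treats it as the registry address; otherwise the image goes to Docker Hub. The following is a simplified Python sketch of that rule. It ignores digests (`@sha256:...`) and the implicit `library/` prefix, and the function name is hypothetical.

```python
def parse_image_ref(ref: str):
    """Split an image reference into (registry, repository, tag). Simplified."""
    first, sep, rest = ref.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref  # default registry: Docker Hub
    # A colon after the last slash separates the tag (default "latest")
    name, colon, tag = remainder.rpartition(":")
    if not colon or "/" in tag:
        name, tag = remainder, "latest"
    return registry, name, tag


print(parse_image_ref("localhost:5000/my-image"))  # ('localhost:5000', 'my-image', 'latest')
print(parse_image_ref("username/app:1.0"))         # ('docker.io', 'username/app', '1.0')
```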

Container Orchestration

- Tools and scripts that help host and manage containers in a production environment
- Get started with Docker Swarm by installing Docker on multiple hosts, then selecting one host as the swarm manager and the rest as workers
- Initialize the swarm manager:

docker swarm init

  - The output includes the docker swarm join command that workers run to connect to the manager

- Docker services are single apps or services that run across the swarm cluster
- Create multiple containers by running this command on the manager node:

docker service create --replicas=100 <image>

- Run multiple containers using Kubernetes (k8s):

kubectl create deployment <name> --image=<image> --replicas=1000

- Scale to a specific number of replicas:

kubectl scale deployment <name> --replicas=2000

- Update containers to a new image (rolling update):

kubectl set image deployment/<name> <container_name>=<upgraded_image>

- Roll back an update:

kubectl rollout undo deployment/<name>

  - Older kubectl versions used kubectl run --replicas and kubectl rolling-update for these tasks; both have since been removed
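Both Swarm services and Kubernetes deployments work from a desired replica count. The orchestrator repeatedly compares that count with what is actually running, then starts or stops containers to close the gap. The following is a toy Python sketch of one reconciliation step. The `web.N` naming scheme and the function are hypothetical and are not part of either tool's API.

```python
def reconcile(desired: int, running: list[str], prefix: str = "web"):
    """Return (to_start, to_stop) so that `running` ends up with `desired` containers."""
    if len(running) < desired:
        existing = set(running)
        to_start, i = [], 0
        while len(running) + len(to_start) < desired:
            name = f"{prefix}.{i}"
            if name not in existing:  # skip names already in use
                to_start.append(name)
            i += 1
        return to_start, []
    return [], running[desired:]  # scale down: stop the surplus


print(reconcile(3, ["web.0"]))                    # (['web.1', 'web.2'], [])
print(reconcile(1, ["web.0", "web.1", "web.2"]))  # ([], ['web.1', 'web.2'])
```

A real orchestrator runs this comparison continuously, which is also how it replaces containers that crash or whose host goes down.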