Kubernetes Basics

Overview

Kubernetes can scale both services and nodes (physical or virtual machines, formerly called minions). A cluster is a set of nodes grouped together for redundancy, so the application stays available if one node fails. The master node runs the Kubernetes control-plane components and is responsible for orchestrating the containers on the worker nodes.

K8s components:

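- API server - Frontend for Kubernetes; users, management tools, and CLIs such as kubectl all interact with the cluster through it (runs on the master node).

- Etcd - Distributed key-value store that holds all the data used to manage the cluster; it also implements locks so that multiple masters don't conflict.

- Kubelet - Agent that runs on each node in the cluster and makes sure the containers are running as expected.

- Container runtime - Underlying software used to run containers (e.g. Docker or containerd).

- Controllers - The "brain" behind orchestration: they notice when nodes or containers go down and decide to bring up replacements.

- Scheduler - Distributes work (containers) across multiple nodes by assigning newly created pods to nodes.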
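The scheduler's job can be pictured with a toy model. This is not kube-scheduler's real code: it assumes each node has only a CPU capacity (the `cpu_capacity` field is hypothetical) and each pod only a CPU request, and it mimics the filter-then-score idea by dropping nodes that can't fit the pod and then picking the one with the most free CPU.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int  # hypothetical: allocatable CPU in millicores
    cpu_used: int = 0
    pods: List[str] = field(default_factory=list)

    def free_cpu(self) -> int:
        return self.cpu_capacity - self.cpu_used


def schedule(pod_name: str, cpu_request: int, nodes: List[Node]) -> Optional[str]:
    """Bind a pod to a node and return the node name, or None if nothing fits."""
    # Filter: keep only nodes with enough free CPU for the pod's request.
    candidates = [n for n in nodes if n.free_cpu() >= cpu_request]
    if not candidates:
        return None  # a real pod would stay Pending until capacity is added
    # Score: prefer the node with the most free CPU, which spreads pods out.
    best = max(candidates, key=lambda n: n.free_cpu())
    best.pods.append(pod_name)
    best.cpu_used += cpu_request
    return best.name


cluster = [Node("node-1", 1000), Node("node-2", 1000)]
for i in range(5):
    print(f"web-{i} ->", schedule(f"web-{i}", 400, cluster))
```

With two 1000m nodes and 400m pods, the pods alternate between node-1 and node-2, and the fifth pod gets None because neither node has 400m left. That is the point where you would add another node.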

Run an application (this creates a pod; --image is required):

```shell
kubectl run <name> --image=<image_name>
```

Get cluster info:

```shell
kubectl cluster-info
```

Get k8s nodes:

```shell
kubectl get nodes
```

Get detailed node description:

```shell
kubectl describe nodes <node_name>
```

Delete pod:

```shell
kubectl delete pod <pod_name>
```

View all created k8s objects:

```shell
kubectl get all
```

Pods

Containers are encapsulated into pods, the smallest object you can create in k8s. Pods usually have a 1:1 relationship with containers, although you can add helper containers to the same pod (this is less common). To scale an app, you add more pods rather than more containers to one pod, and you add nodes if the cluster runs out of capacity.

Deploy a container by creating a pod:

```shell
kubectl run <pod_name> --image=<image_name>

# The image is pulled from a registry (Docker Hub by default)
# if it isn't already present on the node
```

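Create a deployment:

```shell
kubectl create deployment nginx --image=nginx

# Append --record to save the command that caused the change in the
# kubernetes.io/change-cause annotation (deprecated in newer kubectl versions)
```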

Get list of pods in a cluster with additional options (wide):

```shell
kubectl get pods -o wide
```

Get pod information:

```shell
kubectl describe pod <pod_name>
```

YAML

Dictionaries are unordered while lists are ordered.
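Python's dict and list follow the same rules, so the difference can be checked with the standard library alone. This sketch feeds in JSON rather than YAML (JSON is practically a subset of YAML 1.2) because parsing YAML itself would need a third-party library such as PyYAML.

```python
import json

# JSON is (practically) a subset of YAML 1.2, so these snippets are also valid YAML.
container_a = json.loads('{"name": "nginx-container", "image": "nginx"}')
container_b = json.loads('{"image": "nginx", "name": "nginx-container"}')

ports_a = json.loads('[80, 443]')
ports_b = json.loads('[443, 80]')

# Dictionaries (YAML mappings): same keys and values, different order -> equal.
print(container_a == container_b)  # True

# Lists (YAML sequences): same items, different order -> not equal.
print(ports_a == ports_b)  # False
```

This is why the order of keys such as apiVersion, kind, metadata, and spec doesn't matter, while the order of entries under containers does.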

```yaml
# pod-definition.yml

# version of the k8s API
apiVersion: v1

# type of object you want to create (Pod, ReplicaSet, Deployment, Service)
kind: Pod

# pod name, labels
metadata:
  name: myapp-pod
  labels:
    app: myapp
    type: frontend

# pod container data
spec:
  containers:
    - name: nginx-container
      image: nginx
```

```shell
# Create a pod using YAML
kubectl create -f <YAML_file.yml>

# Create or update a pod using YAML
kubectl apply -f <YAML_file.yml>
```

ReplicaSets

Replication controllers (older technology) run multiple copies of a pod in the same cluster, which provides high availability. They also balance load by spreading pods across multiple nodes in the cluster. ReplicaSets (newer) are replacing Replication Controllers.

```yaml
# rc-definition.yml
apiVersion: v1
kind: ReplicationController
metadata:
  name: myapp-rc
  labels:
    app: myapp
    type: frontend
# defines what is inside the pod that you want to replicate
spec:
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        type: frontend
    spec:
      containers:
        - name: nginx-container
          image: nginx
  # number of replicas you want
  replicas: 3
```

Create the Replication Controller:

```shell
kubectl create -f rc-definition.yml
```

View created replication controllers:

```shell
kubectl get replicationcontroller
```

Get pods that the replication controller created:

```shell
kubectl get pods
```

```yaml
# replicaset-definition.yml

# Make sure to use apps/v1 instead of v1 or you'll get:
# Error: unable to recognize <replicaset>: no matches for / Kind=ReplicaSet
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: myapp-replicaset
  labels:
    app: myapp
    type: frontend
# defines what is inside the pod that you want to replicate in case a pod fails
spec:
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        type: frontend
    spec:
      containers:
        - name: nginx-container
          image: nginx
  # number of replicas you want
  replicas: 3

  # REQUIRED: Helps the RS figure out which pods fall under it
  selector:
    matchLabels:
      type: frontend
```

ReplicaSets can also manage pods that weren't created as part of the ReplicaSet, as long as their labels match the selector. This is why the selector is required for ReplicaSets.

Create a replica set using a definition file:

```shell
kubectl create -f <replicaset-def>.yml
```

Get replica set info:

```shell
kubectl get replicaset
```

Delete a replica set (this also deletes all underlying pods):

```shell
kubectl delete replicaset <replicaset-name>
```

Get in-depth replica set info:

```shell
kubectl describe replicaset <replica-set-name>
```

If any pod fails, the replica set is responsible for deploying a new one to replace it. A ReplicaSet is a process that monitors the pods, and labels act as filters so that it knows which pods to monitor.
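The monitoring loop described above can be sketched as a simplified reconcile pass. This is an illustration, not the ReplicaSet controller's code: pods are plain dicts, the generated names (myapp-pod-1, …) are hypothetical, and scale-down just trims the list, whereas the real controller ranks which pods to delete and also checks pod ownership before adopting.

```python
import itertools
from typing import Dict, Iterator, List


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """matchLabels semantics: every key/value in the selector must be on the pod."""
    return all(labels.get(key) == value for key, value in selector.items())


def reconcile(desired: int, selector: Dict[str, str], template_labels: Dict[str, str],
              pods: List[dict], names: Iterator[int]) -> List[dict]:
    """One pass of a simplified ReplicaSet control loop.

    Pods whose labels match the selector count toward the desired number,
    even if the ReplicaSet did not create them.
    """
    owned = [p for p in pods if matches(selector, p["labels"])]
    others = [p for p in pods if not matches(selector, p["labels"])]
    if len(owned) < desired:
        # Too few: create new pods from the template's labels.
        for _ in range(desired - len(owned)):
            owned.append({"name": f"myapp-pod-{next(names)}", "labels": dict(template_labels)})
    elif len(owned) > desired:
        # Too many: delete the extras (simplified: keep the first `desired`).
        owned = owned[:desired]
    return others + owned


names = itertools.count(1)
selector = {"type": "frontend"}
template = {"app": "myapp", "type": "frontend"}

# A hand-made pod with a matching label is adopted, so only 2 new pods are created.
pods = [{"name": "manual-pod", "labels": {"type": "frontend"}},
        {"name": "db", "labels": {"type": "backend"}}]
pods = reconcile(3, selector, template, pods, names)
print(sorted(p["name"] for p in pods))
# ['db', 'manual-pod', 'myapp-pod-1', 'myapp-pod-2']

# Simulate a pod failure: the next pass replaces it.
pods = [p for p in pods if p["name"] != "myapp-pod-1"]
pods = reconcile(3, selector, template, pods, names)
print(sorted(p["name"] for p in pods))
# ['db', 'manual-pod', 'myapp-pod-2', 'myapp-pod-3']
```

The db pod is never touched because its labels don't match the selector, which is exactly the filtering role labels play for a ReplicaSet.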

Ways to update the ReplicaSet to scale to a different number of replicas:

- Update replicas in the RS definition file, then replace the running RS:

```shell
kubectl replace -f <rs-def>.yml
```

Update using the scale command (this doesn't automatically update the definition file):

```shell
kubectl scale --replicas=6 -f <rs-def>.yml
```

Alternative format:

```shell
kubectl scale --replicas=6 replicaset <replicaset-name>
```

Edit the running replica set configuration. This opens the live object stored in the cluster (in a temporary file), not the definition file you created.

```shell
kubectl edit replicaset <rs-name>
```

Deployments

Deployments provide rolling updates, rollbacks, upgrading instances to new image versions, and the ability to pause and resume changes. Deployments sit one level above replica sets (a deployment encapsulates a replica set), and creating a deployment automatically creates its ReplicaSet.

```yaml
# deployment-definition.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deployment
  labels:
    app: myapp
    type: frontend
# defines what is inside the pod that you want to replicate in case a pod fails
spec:
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        type: frontend
    spec:
      containers:
        - name: nginx-container
          image: nginx
  # number of replicas you want
  replicas: 3

  # REQUIRED: Helps the deployment figure out which pods fall under it
  selector:
    matchLabels:
      type: frontend
```

Get deployment info:

```shell
kubectl get deployments
```

Describe deployment info:

```shell
kubectl describe deployments <deployment_name>
```

Updates and Rollbacks

Every rollout creates a new deployment revision number, which allows rollback.

There are 2 types of deployment strategies:

- Recreate: destroy all existing instances, then deploy the new instances. The app goes down in between, so this is not recommended.

- Rolling update (the default strategy): take down one old pod and bring up one new pod at a time, so the app stays available throughout.
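The difference between the two strategies shows up when you count available pods at each step. This sketch assumes the strict one-pod-at-a-time rolling update described above; real Deployments tune this with maxUnavailable and maxSurge under spec.strategy.rollingUpdate (both default to 25%), so actual step sizes can differ.

```python
from typing import List


def recreate(replicas: int) -> List[int]:
    """Available pods after each step: delete all old pods, then start all new ones."""
    steps = [replicas]      # all old pods running
    steps.append(0)         # every old pod destroyed: outage
    steps.append(replicas)  # all new pods up
    return steps


def rolling_update(replicas: int) -> List[int]:
    """Available pods after each step when replacing one pod at a time."""
    old, new = replicas, 0
    steps = [old + new]
    while old > 0:
        old -= 1  # take one old pod down
        steps.append(old + new)
        new += 1  # bring one new pod up
        steps.append(old + new)
    return steps


print("recreate:", recreate(3))        # recreate: [3, 0, 3]
print("rolling: ", rolling_update(3))  # rolling:  [3, 2, 3, 2, 3, 2, 3]
```

Recreate drops to 0 available pods, while the rolling update never goes below replicas - 1.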

Get rollout status:

```shell
kubectl rollout status deployment/<deployment_name>
```

Get rollout history:

```shell
kubectl rollout history deployment/<deployment_name>
```

Rollback updates:

```shell
kubectl rollout undo deployment/<deployment_name>
```

Edit the running deployment configuration. This opens the live object stored in the cluster (in a temporary file), not the definition file you created.

```shell
kubectl edit deployment <deployment-name>
```

Networking

Each pod is assigned its own IP address. In a multi-node cluster, Kubernetes requires that all containers/pods can communicate with each other and with all nodes without NAT.

Services

Services connect groups of pods (frontend, backend, db) to each other and to users while keeping them loosely coupled. A k8s service maps a connection from the user to the node to the container.

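- NodePort: listens on a port on each node and forwards requests to the pods.

- ClusterIP: creates a virtual IP inside the cluster for communication between services, such as frontend and backend.

- LoadBalancer: distributes load across the pods using the cloud provider's native load balancer, where supported.

Services extend across all nodes in the cluster automatically, and a service spreads incoming requests across all pods that match its selector (kube-proxy's default iptables mode picks a pod at random).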
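How a service picks a pod for each request can be sketched in a few lines. This is a simplified model assuming random selection among matching pods; kube-proxy actually programs iptables or IPVS rules rather than running code like this, and the pod names and IPs below are hypothetical.

```python
import random
from collections import Counter

# Hypothetical pods; names, IPs, and labels are illustrative only.
PODS = [
    {"name": "web-1", "ip": "10.244.1.2", "labels": {"app": "myapp", "type": "frontend"}},
    {"name": "web-2", "ip": "10.244.2.3", "labels": {"app": "myapp", "type": "frontend"}},
    {"name": "api-1", "ip": "10.244.1.5", "labels": {"app": "myapp", "type": "back-end"}},
]


def endpoints(selector, pods):
    """IPs of the pods whose labels contain every key/value in the selector."""
    return [p["ip"] for p in pods
            if all(p["labels"].get(k) == v for k, v in selector.items())]


def route(selector, pods, rng):
    """Pick one matching pod at random for a single request."""
    ips = endpoints(selector, pods)
    if not ips:
        raise LookupError("no pods match the service selector")
    return rng.choice(ips)


rng = random.Random(42)
hits = Counter(route({"app": "myapp", "type": "frontend"}, PODS, rng) for _ in range(1000))
print(hits)  # both frontend pod IPs get roughly half of the requests

# A selector typo (front-end vs frontend) leaves the service with no endpoints.
print(endpoints({"app": "myapp", "type": "front-end"}, PODS))  # []
```

The pods can live on different nodes (10.244.1.x and 10.244.2.x here) and still sit behind one service, which is why the service spans all nodes.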

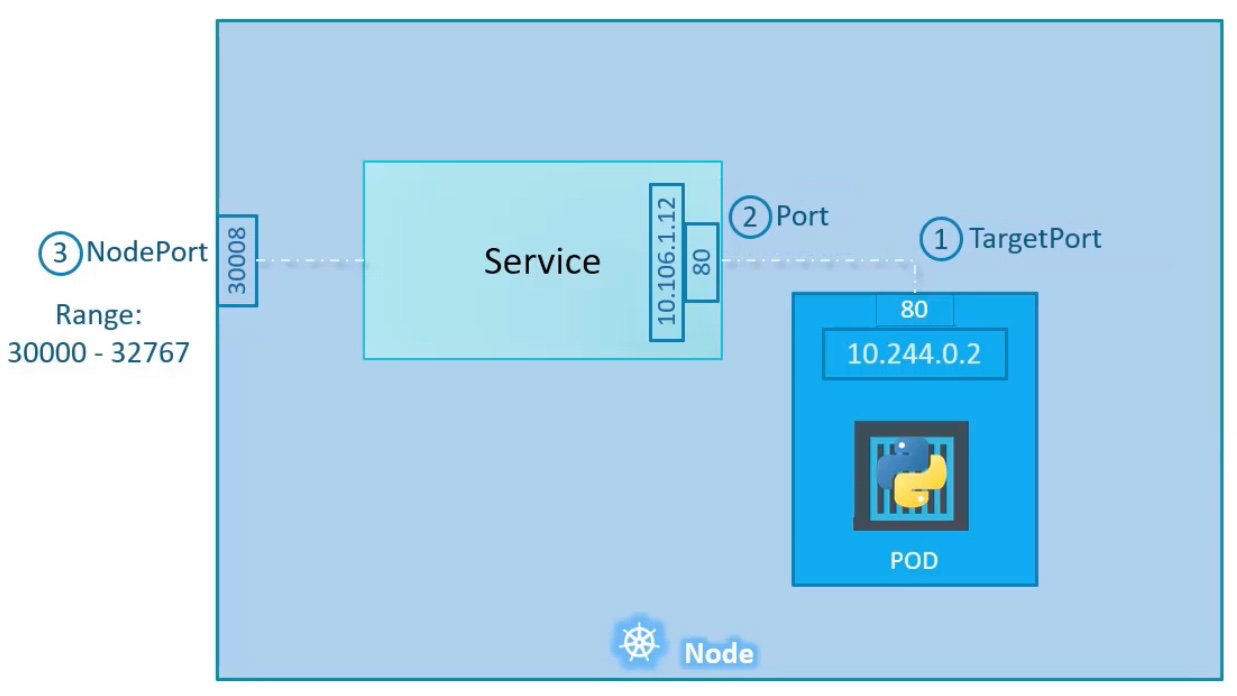

A NodePort service involves 3 ports:

- Pod - targetPort, because that's where the service forwards requests to.

- Service - port.

- Node - nodePort, used to access the application from outside the cluster (by default in the range 30000-32767).

```yaml
# service-definition.yml

apiVersion: v1
kind: Service
metadata:
  name: myapp-service
# Port information
spec:
  type: NodePort
  ports:
    - targetPort: 80 # port on the pod that requests are forwarded to from nodePort
      port: 80 # port on the service
      nodePort: 30008 # port the user accesses on the node
  # Link the service to the pods whose labels match (from the pod's metadata.labels)
  selector:
    app: myapp
    type: frontend
```

If you don’t provide a targetPort it’s assumed to be the same as port.

If you don’t provide a nodePort it’s automatically allocated.
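The two defaulting rules above can be written out as a small function. It is a sketch for a NodePort service only: the 30000-32767 range is the default (kube-apiserver's --service-node-port-range flag can change it), the function name is hypothetical, and picking a random free port is an assumption about how the allocator chooses, not a description of its internals.

```python
import random
from typing import Dict, Optional, Set

# Default NodePort range; clusters can change it with kube-apiserver's
# --service-node-port-range flag.
NODE_PORT_RANGE = range(30000, 32768)


def fill_port_defaults(port_spec: Dict[str, int], used: Set[int],
                       rng: Optional[random.Random] = None) -> Dict[str, int]:
    """Apply the NodePort defaulting rules to one entry of spec.ports."""
    rng = rng or random.Random()
    spec = dict(port_spec)
    # targetPort defaults to the service port.
    spec.setdefault("targetPort", spec["port"])
    if "nodePort" in spec:
        # An explicit nodePort must be inside the range and not already taken.
        if spec["nodePort"] not in NODE_PORT_RANGE:
            raise ValueError(f"nodePort {spec['nodePort']} is outside the allowed range")
        if spec["nodePort"] in used:
            raise ValueError(f"nodePort {spec['nodePort']} is already allocated")
    else:
        # Otherwise a free port from the range is allocated automatically.
        free = [p for p in NODE_PORT_RANGE if p not in used]
        spec["nodePort"] = rng.choice(free)
    used.add(spec["nodePort"])
    return spec


used_ports: Set[int] = set()
print(fill_port_defaults({"port": 80, "nodePort": 30008}, used_ports))
# {'port': 80, 'nodePort': 30008, 'targetPort': 80}
print(fill_port_defaults({"port": 8080}, used_ports))  # nodePort picked from 30000-32767
```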

View created services:

```shell
kubectl get services
```

Get the URL of the service (when running a minikube cluster):

```shell
minikube service <service_name> --url
```

Use ClusterIP services to establish communication between the different tiers of the application's pods.

```yaml
# service-definition.yml
apiVersion: v1
kind: Service
metadata:
  name: back-end
spec:
  type: ClusterIP # default type
  ports:
    - targetPort: 80 # pod port
      port: 80 # service port
  selector:
    app: myapp
    type: back-end
```

Some cloud providers can natively integrate their load balancers with k8s. In unsupported environments, a LoadBalancer service has the same effect as setting NodePort.

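```yaml
# service-definition.yml
apiVersion: v1
kind: Service
metadata:
  name: myapp-service
spec:
  type: LoadBalancer
  ports:
    - targetPort: 80 # pod port
      port: 80 # service port
      nodePort: 30009
  # selects the pods to send traffic to (matches the labels in pod-definition.yml)
  selector:
    app: myapp
    type: frontend
```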

Microservices Architecture

Use a service to make one pod accessible to another. A service is only required when a pod exposes something that another pod needs to access.

- Deploy the pods.

- Create services (ClusterIP) for the connections between pods.

- Create services (NodePort) for external access to the pods that require it.

- containerPort: the port the pod wants to open (set in the pod YAML file).

- Deployments also require services if the pods inside the deployments need connections to other pods/deployments.
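The steps above boil down to one decision per tier: internal only (ClusterIP) or externally reachable (NodePort). This sketch builds the matching Service objects as Python dicts for a hypothetical two-tier app; the helper name service_manifest is made up for illustration, and the field names mirror the YAML definitions earlier in these notes.

```python
import json
from typing import Dict, Optional


def service_manifest(name: str, selector: Dict[str, str], port: int,
                     target_port: Optional[int] = None,
                     node_port: Optional[int] = None) -> dict:
    """Build a v1 Service: ClusterIP for internal tiers, NodePort when external access is needed."""
    entry = {"port": port, "targetPort": target_port if target_port is not None else port}
    if node_port is not None:
        entry["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort" if node_port is not None else "ClusterIP",
            "ports": [entry],
            "selector": selector,
        },
    }


# Hypothetical two-tier app: the back end is internal only, the front end is public.
services = [
    service_manifest("back-end", {"app": "myapp", "type": "back-end"}, 80),
    service_manifest("myapp-service", {"app": "myapp", "type": "frontend"}, 80, node_port=30008),
]
for svc in services:
    print(json.dumps(svc, indent=2))
```

kubectl accepts JSON manifests as well as YAML, so each printed object could be saved to its own .json file and passed to kubectl create -f.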

KaaS (in the Cloud)

With managed Kubernetes, the master nodes are typically managed by the provider and you can't change them. Services of type NodePort are usually switched to type LoadBalancer so the provider's native load balancer can be used.